Although not very popular to undertake, and sometimes outright controversial, benchmarks and benchmarking is often very useful in order to be able to make fair and informed decisions about software codes, libraries, and choice of programming languages.

With this in mind, several Finite Element Analysis (FEA) simulation codes and their implementations are here compared using the fundamental sparse matrix vector multiplication, finite element matrix assembly, and linear solver operations. The following five different simulation codes are evaluated

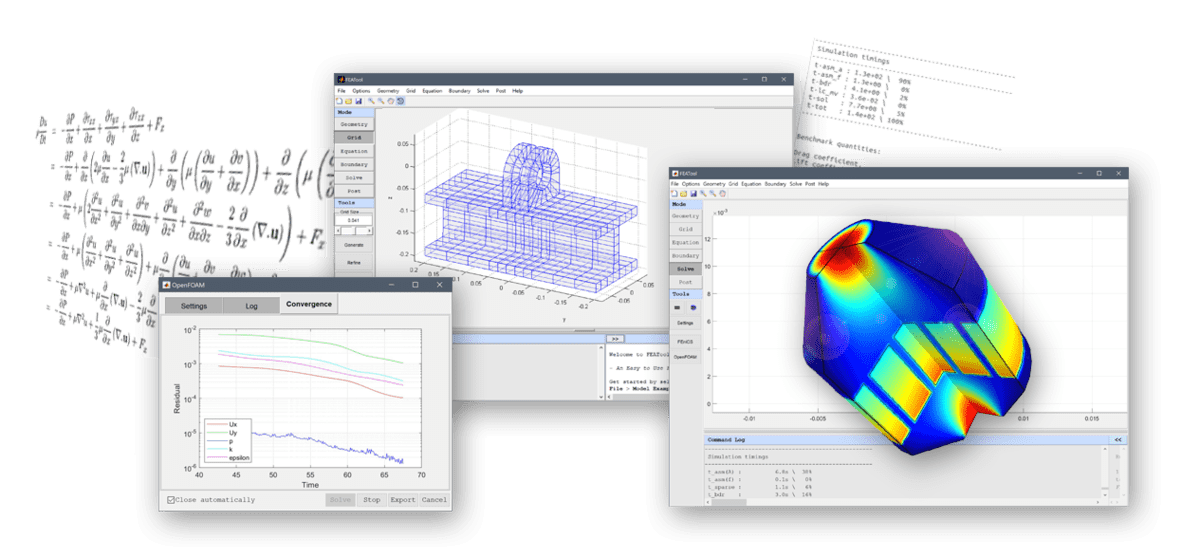

FEATool Multiphysics - Finite Element Analysis Toolbox entirely written in vectorized and optimized MATLAB m-script code

FEM2D - Finite Element library and reference implementation in Fortran 77

FEniCS Project - computing platform for solving general partial differential equations (PDE) with Python and C++

Julia FEM - basic Finite Element implementation in the Julia language ( Julia is a programming language that aims to solve the one-language problem, that is high-performance while at the same time being interpreted and easy to work with)

SFEA (Stencil based Finite Element Analysis) - a high-performance stencil based finite element method (FEM) solver written in Fortran 90 (this solver comes very close to the memory bandwidth limit and therefore gives an indication of the upper performance limit)

All test and timings were performed on a typical workstation system with an Intel Core i7-3820 CPU running Linux in single threaded/serial mode. The Fortran codes were compiled with the Intel Fortran compiler.

Test 1 - Sparse Matrix Vector Product

| 1/h | FEATool | FEM2D | Julia FEM | SFEA |

|---|---|---|---|---|

| MATLAB | Fortran | Julia | Fortran | |

| 128 | 0.002 | 0 | 0.03 | 0 |

| 256 | 0.002 | 0.001 | 0.031 | 0 |

| 512 | 0.006 | 0.005 | 0.034 | 0.001 |

| 1024 | 0.021 | 0.024 | 0.05 | 0.002 |

| 2048 | 0.085 | 0.197 | 0.08 | 0.009 |

| 4096 | 0.411 | 0.25 | 0.034 |

For the sparse matrix vector product benchmark test we can see that FEM2D (CSR sparse format), FEATool (MATLAB/triplet sparse format), and Julia (CSC sparse format) perform similarly especially for moderate grid sizes. The stencil based SFEA approach is about a magnitude faster which is to be expected since memory access is near optimal. FEniCS did not support matrix and vector operations on a high level at the time of testing, so no data is included for FEniCS here.

Test 2 - Finite Element Matrix Assembly

| 1/h | FEATool | FEM2D | FEniCS | Julia FEM |

|---|---|---|---|---|

| MATLAB | Fortran | Python/C++ | Julia | |

| 128 | 0.05 | 0.05 | 0.05 | 0.24 |

| 256 | 0.14 | 0.13 | 0.12 | 0.57 |

| 512 | 0.43 | 0.42 | 0.31 | 1.7 |

| 1024 | 1.7 | 1.5 | 1.1 | 6.5 |

| 2048 | 7 | 6 | 5 | 26 |

| 4096 | 24 | 105 |

For FEM matrix assembly, the vectorized and optimized MATLAB code used by FEATool is actually just as fast as the FEM2D Fortran code (which already is a very good end efficient reference implementation). FEniCS is a little bit faster but this is to be expected since FEM2D and FEATool both use quadrilateral shape functions which are more expensive to assemble than the linear triangular ones used by FEniCS and Julia. The performance of Julia is unfortunately not very good here but this could be due to a non-optimized implementation. The SFEA code is not included since being stencil based FEM assembly costs virtually nothing.

Test 3 - Linear Solver for the Poisson Equation

| 1/h | FEATool | FEM2D | FEniCS | Julia FEM | SFEA |

|---|---|---|---|---|---|

| MATLAB Umfpack | Fortran GMG | Python/C++ PETSc | Julia CHOLMOD? | Fortran GMG | |

| 128 | 0.368 | 0.025 | 0.19 | 0.18 | 0.049 |

| 256 | 1.718 | 0.05 | 0.79 | 0.322 | 0.064 |

| 512 | 7.406 | 0.21 | 4.65 | 0.9 | 0.11 |

| 1024 | 47.646 | 1.1 | 34.1 | 3.4 | 0.16 |

| 2048 | 338.14 | 6 | - | 14.6 | 0.49 |

| 4096 | 63 | 83 | 2.9 | ||

| 8192 | 20 |

In the final test the Poisson equation is solved on the unit square with unit source term and zero homogeneous Dirichlet boundary conditions everywhere. The default linear solver of each code is used throughout the tests. FEM2D and SFEA employ a geometric multigrid solver, FEATool (MATLAB) uses Umfpack/SuiteSparse, and FEniCS uses PETSc. From the timings we can see that the Umfpack and PETSc direct sparse solvers performed about the same, with a slight advantage for PETSc (although failed for the 1/h=2048 grid). As the problem sizes increases we can see that the GMG solvers scale significantly better than the direct solvers, with the stencil based SFEA approach being about a magnitude faster (about 700 times faster than Umfpack on the 1/h=2048 grid with 4.2 million unknowns/degrees of freedom). It is not quite clear which solver Julia uses but due to the performance figures which are on par with the FEM2D Geometric MultiGrid (GMG) solver we suspect it detects that the problem can be solved faster with Cholesky factorization and uses a corresponding solver.

Summary

We have looked at how five different finite element implementations perform on three fundamental test cases. For sparse matrix vector operations the codes performed very similar with exception of the stencil based approach which was a magnitude faster.

For matrix assembly it was quite surprising just how good performance one can get from a properly vectorized and optimized MATLAB implementation, showing the same performance as a reference implementation in compiled Fortran.

Regarding the solvers Geometric MultiGrid (GMG) unsurprisingly beats direct solvers when grid sizes increased. Here also the stencil based approach was faster by a magnitude or more.

These few tests have shown that it seems entirely possible to write high-performance FEM simulation codes in almost any programming language. What seems more important for performance is the choice of data structures (sparse versus stencil) and algorithm (direct or iterative solvers). The outlier might be Julia for which it currently is not fully clear how it will perform, but due to being a new language it clearly does show some potential.

A follow up benchmark which tests the whole solution process can be found in the corresponding FEM benchmark comparison post.